Over the past few years, classical convolutional neural networks (cCNNs) have led to remarkable advances in computer vision. Many of these algorithms can now categorize objects in good-quality images with high accuracy.

However, in real-world applications such as autonomous driving or robotics, imaging data rarely consists of pictures taken under ideal lighting conditions. Often, the images that CNNs need to process feature occluded objects, motion distortion, or low signal-to-noise ratios (SNRs), either as a result of poor image quality or low light levels.

Although cCNNs have also been successfully used to de-noise images and enhance their quality, these networks cannot combine information from multiple frames or video sequences and are hence easily outperformed by humans on low-quality images. Till S. Hartmann, a neuroscience researcher at Harvard Medical School, has recently carried out a study that addresses these limitations, introducing a new CNN approach for analyzing noisy images.

Hartmann, who has a background in neuroscience, has spent over a decade studying how humans perceive and process visual information. In recent years, he became increasingly fascinated by the similarities between deep CNNs used in computer vision and the brain’s visual system.

In the visual cortex, the area of the brain specialized in processing visual input, the majority of neural connections are made in lateral and feedback directions. This suggests that there is a lot more to visual processing than the feedforward techniques employed by cCNNs, and it motivated Hartmann to test convolutional layers that incorporate recurrent processing, which is vital for the human brain’s processing of visual information.

Using recurrent connections within the CNN’s convolutional layers, Hartmann’s approach ensures that networks are better equipped to process pixel noise, such as that present in images taken under poor light conditions. When tested on simulated noisy video sequences, recurrent CNNs (gruCNNs) performed far better than classical approaches, successfully classifying objects in simulated low-quality videos, such as those taken at night.
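To make the idea of a simulated noisy video sequence concrete, here is a minimal sketch in Python with NumPy. It is not the procedure used in the study: the helper `noisy_video`, the choice of independent Gaussian pixel noise, and the definition of SNR as signal standard deviation divided by noise standard deviation are all assumptions made for illustration.

```python
import numpy as np

def noisy_video(image, snr, n_frames, seed=0):
    """Repeat a static image n_frames times, adding independent Gaussian
    pixel noise to each frame. SNR is taken here as signal std / noise std
    (an assumed definition, not one given in the article)."""
    rng = np.random.default_rng(seed)
    sigma = image.std() / snr
    noise = rng.normal(0.0, sigma, size=(n_frames,) + image.shape)
    return image[None] + noise

# Hypothetical test image: a bright square on a dark background.
image = np.zeros((32, 32))
image[8:24, 8:24] = 1.0

frames = noisy_video(image, snr=0.5, n_frames=16)

# Root-mean-square error of one frame versus the average of all frames.
single_err = np.sqrt(np.mean((frames[0] - image) ** 2))
avg_err = np.sqrt(np.mean((frames.mean(axis=0) - image) ** 2))
print(f"single frame RMSE: {single_err:.3f}, 16-frame average RMSE: {avg_err:.3f}")
```

Because the noise in each frame is independent, averaging 16 frames should shrink the error by roughly a factor of four, which is the basic reason that combining information across frames helps at low SNR.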

Adding recurrent connections to a convolutional layer ultimately adds spatially constrained memory, allowing the network to learn how to integrate information over time before the signal is too abstract. This feature can be particularly helpful when there is low signal quality, such as in images that are noisy or taken in poor light conditions.
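The sketch below shows one way a convolutional layer can carry spatially constrained memory from frame to frame. It assumes, as the name gruCNN suggests, that the recurrence is a gated recurrent unit (GRU) whose matrix multiplications are replaced by convolutions. The article does not describe the exact architecture, so the class `ConvGRUCell`, its kernel size, and its random weights are illustrative assumptions rather than Hartmann’s implementation.

```python
import numpy as np

def conv2d_same(x, w):
    """Zero-padded 'same' 2D cross-correlation.
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W)."""
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, height, width = x.shape
    out = np.zeros((c_out, height, width))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + height, j:j + width]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class ConvGRUCell:
    """Generic convolutional GRU cell (illustrative, untrained weights)."""

    def __init__(self, c_in, c_hidden, k=3, seed=0):
        rng = np.random.default_rng(seed)

        def init(c_from):
            return rng.normal(0.0, 0.1, size=(c_hidden, c_from, k, k))

        self.wz, self.uz = init(c_in), init(c_hidden)  # update gate
        self.wr, self.ur = init(c_in), init(c_hidden)  # reset gate
        self.wh, self.uh = init(c_in), init(c_hidden)  # candidate state

    def step(self, x, h):
        z = sigmoid(conv2d_same(x, self.wz) + conv2d_same(h, self.uz))
        r = sigmoid(conv2d_same(x, self.wr) + conv2d_same(h, self.ur))
        h_new = np.tanh(conv2d_same(x, self.wh) + conv2d_same(r * h, self.uh))
        # Each pixel's state blends its previous value with the new candidate.
        return (1.0 - z) * h + z * h_new

def run_sequence(cell, frames, c_hidden):
    """frames: (T, C_in, H, W). Returns the final hidden state (c_hidden, H, W)."""
    _, _, height, width = frames.shape
    h = np.zeros((c_hidden, height, width))
    for x in frames:
        h = cell.step(x, h)
    return h

# Usage: five random single-channel 8x8 frames, four hidden channels.
cell = ConvGRUCell(c_in=1, c_hidden=4)
frames = np.random.default_rng(1).normal(size=(5, 1, 8, 8))
h = run_sequence(cell, frames, c_hidden=4)
print(h.shape)
```

The hidden state keeps the spatial layout of the input, so each location accumulates evidence from its own neighborhood across frames while the representation is still close to the pixels.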

In his study, Hartmann found that while cCNNs performed well on images with high SNRs, gruCNNs outperformed them on low-SNR images. Even cCNNs augmented with Bayes-optimal temporal integration, which allows them to combine multiple image frames, did not match gruCNN performance. Hartmann also observed that at low SNRs, gruCNN predictions had higher confidence levels than those produced by cCNNs.
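As a rough illustration of what temporal integration on top of a frame-by-frame classifier can look like, the sketch below combines per-frame class probabilities into a single prediction. It assumes the frames are conditionally independent given the class and that the class prior is uniform, in which case multiplying the per-frame posteriors and renormalizing gives the Bayes-optimal result. The article does not specify how the integration was done in the study, and the function `integrate_frames` and the example probabilities are hypothetical.

```python
import numpy as np

def integrate_frames(frame_probs, eps=1e-12):
    """Combine per-frame class probabilities of shape (T, K) into one
    posterior of shape (K,), assuming conditionally independent frames
    and a uniform class prior."""
    log_p = np.log(np.clip(frame_probs, eps, 1.0)).sum(axis=0)
    log_p -= log_p.max()  # subtract the max for numerical stability
    p = np.exp(log_p)
    return p / p.sum()

# Hypothetical outputs of a frame-by-frame classifier on four noisy frames.
frame_probs = np.array([
    [0.40, 0.35, 0.25],
    [0.45, 0.30, 0.25],
    [0.38, 0.37, 0.25],
    [0.42, 0.33, 0.25],
])

combined = integrate_frames(frame_probs)
print(combined.round(3))
```

Each frame on its own only weakly favors the first class, but the combined posterior favors it more strongly than any single frame does. This kind of integration acts only on the classifier’s final outputs, whereas recurrent connections inside the convolutional layers can combine evidence much earlier in the processing chain.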

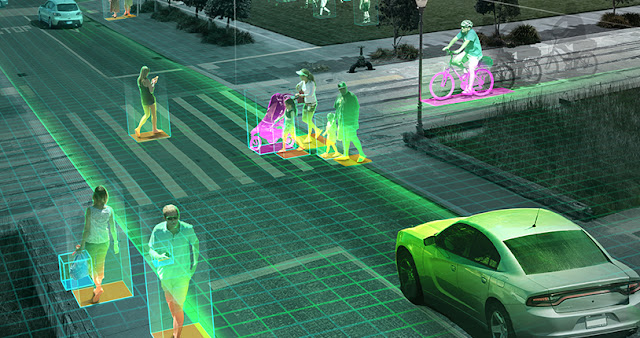

While the human brain has evolved to see in the dark, most existing CNNs are not yet equipped to process blurry or noisy images. By providing networks with the capacity to integrate images over time, the approach devised by Hartmann could eventually enhance computer vision to the point that it matches, or even exceeds, human performance. This could be valuable for applications such as self-driving cars and drones, as well as in other situations where a machine needs to ‘see’ under non-ideal lighting conditions.

The study carried out by Hartmann could pave the way for the development of more advanced CNNs that can analyze images taken under poor light conditions. Using recurrent connections in the early stages of neural network processing could vastly improve computer vision tools, overcoming the limitations of classical CNN approaches in processing noisy images or video streams.

As a next step, Hartmann could expand the scope of his research by exploring real-life applications of gruCNNs, testing them in a wide range of real-world scenarios. Potentially, his approach could also be used to enhance the quality of amateur or shaky home videos.